In Bayesian statistical inference, a prior probability distribution, often simply called the prior, of an uncertain quantity is the probability distribution that would express one’s beliefs about this quantity before some evidence is taken into account.

The implementation is as follows:

```python
import math

import numpy


class Bayes:
    def __init__(self):
        # Watermelon training set: six discrete attributes, two continuous
        # ones (density, sugar content) and the class label in the last column.
        # '好瓜' = good melon, '坏瓜' = bad melon.
        self.data = [
            ['青绿', '蜷缩', '浊响', '清晰', '凹陷', '硬滑', 0.697, 0.460, '好瓜'],
            ['乌黑', '蜷缩', '沉闷', '清晰', '凹陷', '硬滑', 0.774, 0.376, '好瓜'],
            ['乌黑', '蜷缩', '浊响', '清晰', '凹陷', '硬滑', 0.634, 0.264, '好瓜'],
            ['青绿', '蜷缩', '沉闷', '清晰', '凹陷', '硬滑', 0.608, 0.318, '好瓜'],
            ['浅白', '蜷缩', '浊响', '清晰', '凹陷', '硬滑', 0.556, 0.215, '好瓜'],
            ['青绿', '稍蜷', '浊响', '清晰', '稍凹', '软粘', 0.403, 0.237, '好瓜'],
            ['乌黑', '稍蜷', '浊响', '稍糊', '稍凹', '软粘', 0.481, 0.149, '好瓜'],
            ['乌黑', '稍蜷', '浊响', '清晰', '稍凹', '硬滑', 0.437, 0.211, '好瓜'],
            ['乌黑', '稍蜷', '沉闷', '稍糊', '稍凹', '硬滑', 0.666, 0.091, '坏瓜'],
            ['青绿', '硬挺', '清脆', '清晰', '平坦', '软粘', 0.243, 0.267, '坏瓜'],
            ['浅白', '硬挺', '清脆', '模糊', '平坦', '硬滑', 0.245, 0.057, '坏瓜'],
            ['浅白', '蜷缩', '浊响', '模糊', '平坦', '软粘', 0.343, 0.099, '坏瓜'],
            ['青绿', '稍蜷', '浊响', '稍糊', '凹陷', '硬滑', 0.639, 0.161, '坏瓜'],
            ['浅白', '稍蜷', '沉闷', '稍糊', '凹陷', '硬滑', 0.657, 0.198, '坏瓜'],
            ['乌黑', '稍蜷', '浊响', '清晰', '稍凹', '软粘', 0.360, 0.370, '坏瓜'],
            ['浅白', '蜷缩', '浊响', '模糊', '平坦', '硬滑', 0.593, 0.042, '坏瓜'],
            ['青绿', '蜷缩', '沉闷', '稍糊', '稍凹', '硬滑', 0.719, 0.103, '坏瓜'],
        ]
        # Attribute names: color, root, knock sound, texture, navel, touch,
        # density, sugar content.
        self.labels = ['色泽', '根蒂', '敲击', '纹理', '脐部', '触感', '密度', '含糖率']
        # Per-class statistics of the continuous attributes, in the order
        # good/density, good/sugar, bad/density, bad/sugar.
        self.mean = [self.__mean(6, '好瓜'), self.__mean(7, '好瓜'), self.__mean(6, '坏瓜'), self.__mean(7, '坏瓜')]
        self.std = [self.__sigma(6, '好瓜'), self.__sigma(7, '好瓜'), self.__sigma(6, '坏瓜'), self.__sigma(7, '坏瓜')]

    def priorProbability(self, cls):
        # P(c): fraction of training samples that belong to class cls.
        count = 0
        for item in self.data:
            if item[-1] == cls:
                count += 1
        return count / len(self.data)

    def conditionProbabilityDiscrete(self, label, prop, cls):
        # P(x_i | c): among the samples of class cls, the fraction whose
        # attribute `label` takes the value `prop`.
        idx = self.labels.index(label)
        count = 0
        for item in self.data:
            if item[-1] == cls and item[idx] == prop:
                count += 1
        return count / self.__takeList().count(cls)
```

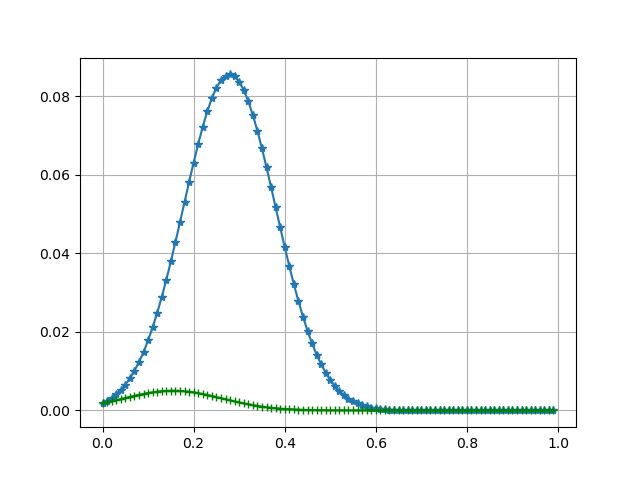

```python
    # Methods of class Bayes, continued.

    def conditionProbabilityContinuous(self, prop, pos):
        # Gaussian density of prop, using the mean and standard deviation
        # stored at index pos of self.mean and self.std.
        val = (1 / (math.sqrt(2 * numpy.pi) * self.std[pos])) * numpy.exp(
            -(numpy.power(prop - self.mean[pos], 2) / (2 * numpy.power(self.std[pos], 2))))
        return val

    def __takeList(self):
        # Class labels of all training samples.
        classes = []
        for item in self.data:
            classes.append(item[-1])
        return classes

    def __mean(self, pos, cls):
        # Mean of the continuous attribute in column pos over class cls.
        if not isinstance(self.data[0][pos], float):
            raise ValueError("Property is not continuous!")
        values = []
        for item in self.data:
            if item[-1] == cls:
                values.append(item[pos])
        return numpy.mean(values)

    def __sigma(self, pos, cls):
        # Sample standard deviation (ddof=1) of column pos over class cls.
        if not isinstance(self.data[0][pos], float):
            raise ValueError("Property is not continuous!")
        values = []
        for item in self.data:
            if item[-1] == cls:
                values.append(item[pos])
        return numpy.std(values, ddof=1)

    def bayesClassify(self, inst):
        # Returns [score for '好瓜', score for '坏瓜'].
        if len(inst) != len(self.data[0]) - 1:
            raise ValueError('Input data invalid!')
        prob = [self.priorProbability('好瓜'), self.priorProbability('坏瓜')]
        # Discrete attributes are the string-valued entries of inst.
        for i, item in enumerate(inst):
            if isinstance(item, str):
                prob[0] *= self.conditionProbabilityDiscrete(self.labels[i], item, '好瓜')
                prob[1] *= self.conditionProbabilityDiscrete(self.labels[i], item, '坏瓜')
        # Continuous attributes: density (index 6) and sugar content (index 7).
        prob[0] *= self.conditionProbabilityContinuous(inst[6], 0)
        prob[0] *= self.conditionProbabilityContinuous(inst[7], 1)
        prob[1] *= self.conditionProbabilityContinuous(inst[6], 2)
        prob[1] *= self.conditionProbabilityContinuous(inst[7], 3)
        # if prob[0] > prob[1]:
        #     print('好瓜')
        # else:
        #     print('坏瓜')
        return prob
```

The raw implementation returns, for each class, the product of the prior probability and the conditional probabilities.
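As a rough, standalone illustration of the continuous part of that product, the sketch below evaluates a normal density for the density attribute of the good melons. It uses only the standard library, and `gaussian_pdf` is a hypothetical helper name, not part of the class above.

```python
import math
import statistics

# Density values of the eight '好瓜' (good melon) rows of the training set.
good_density = [0.697, 0.774, 0.634, 0.608, 0.556, 0.403, 0.481, 0.437]


def gaussian_pdf(x, mu, sigma):
    """Normal density N(mu, sigma^2) evaluated at x (hypothetical helper)."""
    coeff = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))


mu = statistics.mean(good_density)       # sample mean
sigma = statistics.stdev(good_density)   # sample std, n - 1 in the denominator

# Density of the value 0.697 under the good-melon class.
p_density_given_good = gaussian_pdf(0.697, mu, sigma)
print(mu, sigma, p_density_given_good)
```

A density value may exceed 1, so the per-class products are scores to be compared with each other rather than probabilities that sum to 1.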

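However, when an attribute value of the sample never occurs together with a class in the training set, the corresponding conditional probability is 0, and so the whole product for that class is 0.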
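The problem can be reproduced with a single attribute. The sketch below is a minimal illustration, assuming only the knock-sound column and the class labels of the 17 training rows; `conditional` is a hypothetical helper that mirrors the unsmoothed frequency estimate.

```python
# (knock sound, class) pairs taken from the 17 training rows.
# '清脆' (crisp) never occurs together with '好瓜' (good melon).
samples = [
    ('浊响', '好瓜'), ('沉闷', '好瓜'), ('浊响', '好瓜'), ('沉闷', '好瓜'),
    ('浊响', '好瓜'), ('浊响', '好瓜'), ('浊响', '好瓜'), ('浊响', '好瓜'),
    ('沉闷', '坏瓜'), ('清脆', '坏瓜'), ('清脆', '坏瓜'), ('浊响', '坏瓜'),
    ('浊响', '坏瓜'), ('沉闷', '坏瓜'), ('浊响', '坏瓜'), ('浊响', '坏瓜'),
    ('沉闷', '坏瓜'),
]


def conditional(value, cls):
    """Unsmoothed estimate of P(value | cls) by relative frequency."""
    in_class = [v for v, c in samples if c == cls]
    return in_class.count(value) / len(in_class)


prior_good = sum(1 for _, c in samples if c == '好瓜') / len(samples)  # 8 / 17
p_crisp_good = conditional('清脆', '好瓜')  # 0 / 8
p_crisp_bad = conditional('清脆', '坏瓜')   # 2 / 9

# One zero factor wipes out the score, whatever the other attributes say.
score_good = prior_good * p_crisp_good
print(p_crisp_good, p_crisp_bad, score_good)
```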

So we modify the calculation of the prior and conditional probabilities, adding 1 to every count and enlarging the denominators accordingly (Laplacian correction):

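```python
    def priorProbability(self, cls):
        count = 0
        for item in self.data:
            if item[-1] == cls:
                count += 1
        # Add 1 to the count and N = 2 (the number of classes) to the total.
        return (count + 1) / (len(self.data) + 2)

    def conditionProbabilityDiscrete(self, label, prop, cls):
        idx = self.labels.index(label)
        count = 0
        for item in self.data:
            if item[-1] == cls and item[idx] == prop:
                count += 1
        # N_i: number of distinct values of this attribute in the training
        # data (3 for most attributes here, 2 for '触感').
        n_values = len({item[idx] for item in self.data})
        return (count + 1) / (self.__takeList().count(cls) + n_values)
```

With these changes, the same sample yields a proper answer instead of 0.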
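The effect of the correction can be checked by hand on a few counts from the training set. The snippet below is only a sketch: `smoothed_estimate` is a hypothetical helper, and the counts are read off the data listed earlier.

```python
def smoothed_estimate(count, total, n_values):
    """Add-one estimate (count + 1) / (total + n_values)."""
    return (count + 1) / (total + n_values)


# Prior of '好瓜' (good melon): 8 of 17 samples, 2 classes.
prior_good = smoothed_estimate(8, 17, 2)    # 9 / 19

# P(knock sound = '清脆' (crisp) | good melon): 0 of 8 good melons,
# 3 possible knock sounds.
p_crisp_good = smoothed_estimate(0, 8, 3)   # 1 / 11, no longer zero

# Knock-sound counts among the good melons; the smoothed estimates for
# one attribute still sum to 1.
knock_counts_good = {'浊响': 6, '沉闷': 2, '清脆': 0}
total = sum(smoothed_estimate(n, 8, 3) for n in knock_counts_good.values())
print(prior_good, p_crisp_good, total)
```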

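The mathematical formulation is:

$$\text{Prior probability:}\quad \hat P(c) = \frac{|D_c| + 1}{|D| + N}$$

$$\text{Conditional probability:}\quad \hat P(x_i \mid c) = \frac{|D_{c,x_i}| + 1}{|D_c| + N_i}$$

Here $D$ is the training set, $D_c$ the samples of class $c$, $D_{c,x_i}$ the samples of class $c$ whose $i$-th attribute takes the value $x_i$, $N$ the number of classes, and $N_i$ the number of possible values of the $i$-th attribute.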
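As a worked instance on the training set above, take the class "bad melon" (坏瓜, 9 of 17 samples, with 2 classes in total) and the color value "green" (青绿), which appears in 3 of the 8 good melons and is one of 3 possible colors. Under these counts the corrected estimates come out as:

$$\hat P(\text{bad}) = \frac{9 + 1}{17 + 2} = \frac{10}{19} \approx 0.526, \qquad \hat P(\text{green} \mid \text{good}) = \frac{3 + 1}{8 + 3} = \frac{4}{11} \approx 0.364$$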